Do you want to develop a macOS application with a multi-process architecture, where a picture produced in one process is rendered in a window of another process? What if you need to display not just a static picture, but a full-screen 4K video at 60 fps? And you would rather not burn the CPU on your client’s Mac ;) If this is what you are looking for, this article is for you.

My name is Kyrylo. For many years, I’ve been developing a commercial cross-platform library that integrates the Chromium web browser control into Java and .NET desktop applications. To integrate Chromium into a third-party application, we have to solve a lot of challenging problems.

One of the most important and ambitious tasks is rendering. Its complexity comes from the Chromium multi-process architecture. Chromium renders the content of a web page in a separate GPU process, and we need to display the produced content in another Java or .NET process. The problem can be stated as follows: display the graphical content of process A in process B:

Sharing rendering result with a distinct process

This task is solved differently on each supported platform. For example, on Windows and Linux this is done by embedding the Chromium window into the target application window. This is a common use case for the native APIs: the Win32 SetParent function or the Xlib XReparentWindow function.

Unfortunately, this approach does not work on macOS. It is forbidden to embed a window from one process into a window from another process. However, there are ways to address this issue.

In this article, I will show how to render content created in one process in a window of another process on macOS using the CALayer sharing approach. The concept will be illustrated by a simple app.

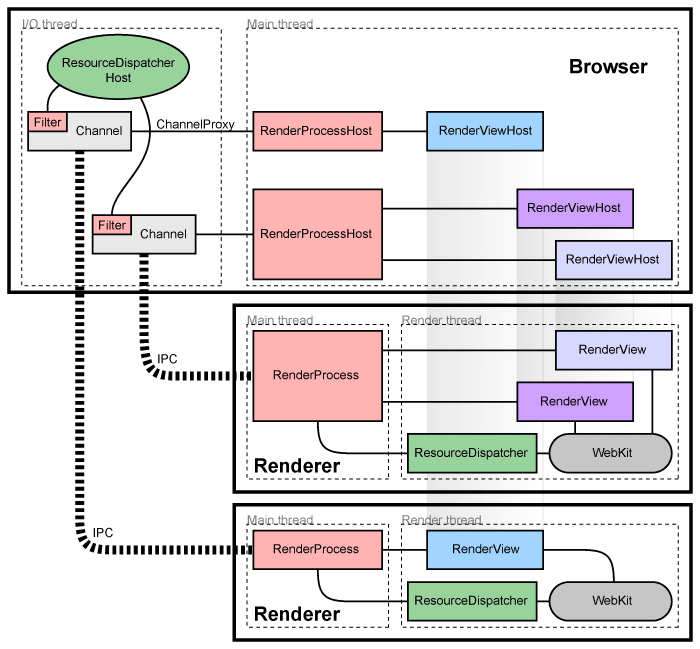

Chromium multi-processing architecture

In this section let’s briefly review the Chromium architecture.

The main idea of Chromium is to use separate processes for displaying web pages. Each process is isolated from others and from the rest of the system.

In the picture below, the Browser represents the main process (the top-level window). The Renderer is responsible for interpreting and laying out HTML (a browser tab).

This is done for security reasons and makes Chromium resemble an operating system: a crash in one process (GPU, renderer, etc.) does not crash the whole system.

Chromium multi-process architecture (taken from here)

The same concept applies to graphics. Chromium hosts a separate process for GPU-related operations.

Chromium GPU process

Texture sharing

Let’s get back to the original task of getting pixels from a different process. Before diving into the implementation details, it’s essential to understand which entities are used for rendering on macOS.

A simplified CoreAnimation model

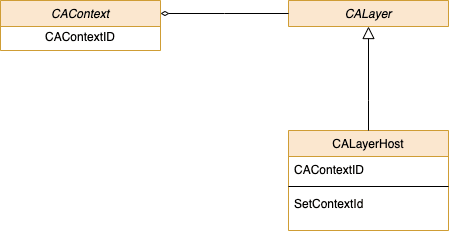

In the picture above you can see the main objects used to display graphic content and relationships between them:

- NSWindow represents an on-screen window. This class provides an area for embedding other views, and accepts and distributes events caused by user interaction via the mouse and keyboard;
- NSView is a fundamental building block that renders content within its bounds. This is the base class for UI controls such as labels, buttons, and sliders;
- CALayer is an object that provides the backing store for views. CALayer instances can also display visual content without a parent view. Layers are used to customize views, e.g., by adding rounded corners, shadows, highlighting, etc.

We are interested in CALayer as it provides more flexibility for displaying content. To be exact, we will work with its subclass, CALayerHost, which is essential for texture sharing. So what is “texture sharing”?

Texture sharing can be described as using graphical content created in one process in another process. It is how the multi-process concept described in this article is implemented.

Creating graphical content in one process — displaying in a different one

IOSurface

Apple provides a means for this purpose: the IOSurface framework. This API is based on the IOSurface object, which can be accessed from a remote process via low-level macOS kernel primitives called Mach ports. The IOSurface approach is used in Chromium, and we’ve been using it in our project for a long time. It performs well and has a stable API.

However, after some time we found that some sites are not displayed properly when the IOSurface rendering flow is enabled in Chromium. This pushed us to investigate the CALayerHost approach, which is the default one in Chromium on macOS.

CALayerHost

As I’ve already mentioned, CALayerHost is a CALayer subclass that renders another layer’s render context. Texture sharing by means of CALayerHost involves the following entities:

- CAContext is a CoreAnimation object that holds information about the environment and is used for sharing a CALayer across processes;
- CALayerHost is a CALayer subclass that can render a remote layer’s content;
- CAContextID is a globally unique identifier of a CAContext. It is worth mentioning that this token can be passed across processes directly, whereas passing a mach_port requires additional actions.
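Since a CAContextID is a plain 32-bit integer (it is declared as uint32_t later in this article), it can travel through any byte-oriented channel between two processes on the same machine. A minimal sketch of that idea follows; encode_context_id and decode_context_id are hypothetical helper names, not part of any Apple or Chromium API.

```cpp
#include <array>
#include <cstdint>
#include <cstring>

// Mirrors the typedef used later in the article.
using CAContextID = std::uint32_t;

using ContextIdBytes = std::array<unsigned char, sizeof(CAContextID)>;

// Hypothetical helper: copies the ID into a fixed-size byte buffer that
// can be written to a pipe or socket as is. Host byte order is enough
// here because both processes run on the same machine.
ContextIdBytes encode_context_id(CAContextID id) {
  ContextIdBytes bytes{};
  std::memcpy(bytes.data(), &id, sizeof(id));
  return bytes;
}

// Hypothetical helper: restores the ID from the received bytes.
CAContextID decode_context_id(const ContextIdBytes& bytes) {
  CAContextID id = 0;
  std::memcpy(&id, bytes.data(), sizeof(id));
  return id;
}
```

A Mach port, by contrast, is a kernel-managed right rather than a plain value, which is why it cannot simply be copied into a buffer like this.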

Classes required for rendering sharing

Example

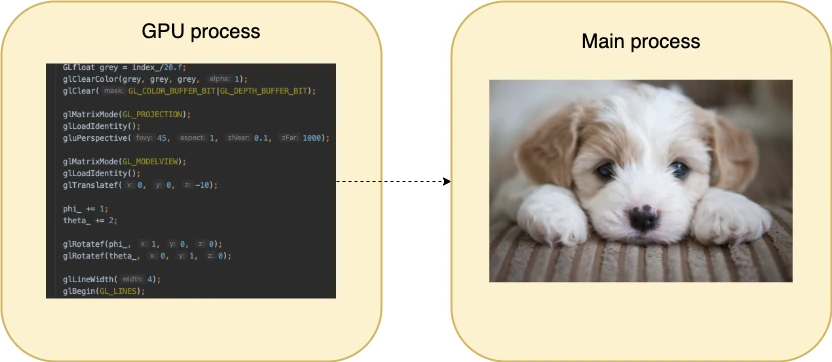

I am going to illustrate the idea of texture sharing with a simple application. Its structure will be similar to that of Chromium, i.e., separate processes for different tasks.

The GPU process will do all the drawing using a CALayer as a back buffer. After drawing, the CAContextID of the CAContext that holds this CALayer will be sent to the main process. The main process will use the CAContextID to create CALayerHost objects that display the content in the created NSWindow objects.

Implementation details

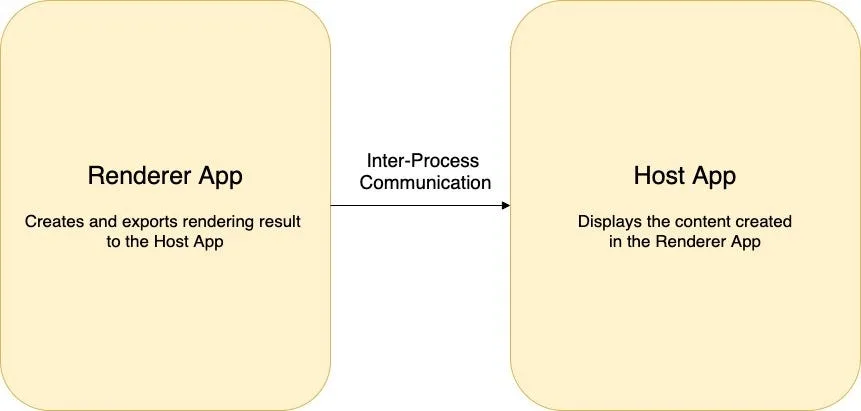

This app consists of two processes:

- Renderer App — responsible for creating texture to share;

- Host App — displays the content created in the Renderer App.

Communication between the processes is made via a simple IPC library.

The sample application architecture

The structure above is the good old client-server approach, where the Host App makes requests to the Renderer App.

Let’s glance through the significant parts of the application.

The main function starts both processes: it forks, and one branch runs the Host App while the other runs the Renderer App. The number of windows to display is configured by a constant:

    int main(int argc, char* argv[]) {
      constexpr int kNumberWindows = 2;

      // One branch of the fork becomes the Host App, the other the Renderer App.
      if (ipc::Ipc::instance().fork_process()) {
        HostApp server_app;
        server_app.run(kNumberWindows);
      } else {
        RendererApp client_app;
        client_app.run(kNumberWindows);
      }
    }
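The ipc::Ipc helper is not shown in the article. As a rough sketch of what a call like fork_process() might do, the snippet below creates a socket pair and forks, so that each process keeps one end of the channel. It uses plain C++ with POSIX calls; ForkedChannel and fork_with_channel are hypothetical names, not part of the sample's IPC library.

```cpp
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

// Hypothetical stand-in for the state an IPC helper could keep after forking.
struct ForkedChannel {
  int fd = -1;             // this process's end of the socket pair
  bool is_parent = false;  // true in the process that called fork()
  bool ok = false;         // false if socketpair() or fork() failed
};

// Creates a bidirectional channel and forks. Both processes return from
// this function; each one keeps its own end and closes the other.
ForkedChannel fork_with_channel() {
  ForkedChannel result;
  int fds[2];
  if (socketpair(AF_UNIX, SOCK_STREAM, 0, fds) != 0) {
    return result;
  }
  pid_t pid = fork();
  if (pid < 0) {
    close(fds[0]);
    close(fds[1]);
    return result;
  }
  result.is_parent = (pid > 0);
  result.fd = result.is_parent ? fds[0] : fds[1];
  close(result.is_parent ? fds[1] : fds[0]);
  result.ok = true;
  return result;
}
```

With a helper like this, main could branch on is_parent in the same way the sample branches on the return value of fork_process().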

Declaring necessary API

The CALayerHost class, as well as the other objects necessary for layer sharing, is available only at runtime and is not declared in the public SDK headers. Thus it must be declared explicitly in our code.

    #import <Cocoa/Cocoa.h>
    #import <QuartzCore/QuartzCore.h>

    // The CGSConnectionID is used to create the CAContext in the
    // process that is going to share the CALayers that it is rendering
    // to another process to display.
    extern "C" {
    typedef uint32_t CGSConnectionID;
    CGSConnectionID CGSMainConnectionID(void);
    }

    // The CAContextID type identifies a CAContext across processes.
    // This is the token that is passed from the process that is sharing
    // the CALayer that it is rendering to the process that will be
    // displaying that CALayer.
    typedef uint32_t CAContextID;

    // The CAContext has a static CAContextID which can be sent to
    // another process. When a CALayerHost is created using that
    // CAContextID in another process, the content displayed by that
    // CALayerHost will be the content of the CALayer that is set as the
    // |layer| property on the CAContext.
    @interface CAContext : NSObject
    + (id)contextWithCGSConnection:(CGSConnectionID)connectionId
                           options:(NSDictionary*)optionsDict;
    @property(readonly) CAContextID contextId;
    @property(retain) CALayer* layer;
    @end

    // The CALayerHost is created in the process that will display the
    // content being rendered by another process. Setting the
    // |contextId| property on an object of this class will make this
    // layer display the content of the CALayer that is set to the
    // CAContext with that CAContextID in the layer sharing process.
    @interface CALayerHost : CALayer
    @property CAContextID contextId;
    @end

Renderer App

The Renderer App accomplishes two main tasks:

- Renders content by means of the OpenGL API. This is done in the ClientGlLayer class that inherits from CAOpenGLLayer;
- Exposes the newly created layer to the Host App by means of the CAContext and the IPC library.

    void RendererApp::exportLayer(CALayer* gl_layer) {
      // Create a CAContext bound to this process's window server connection.
      NSDictionary* dict = [[NSDictionary alloc] init];
      CGSConnectionID connection_id = CGSMainConnectionID();
      CAContext* remoteContext = [CAContext contextWithCGSConnection:connection_id
                                                             options:dict];

      printf("Renderer: Setting the CAContext's layer to the CALayer to export.\n");
      [remoteContext setLayer:gl_layer];

      printf("Renderer: Sending the ID of the context back to the server.\n");
      CAContextID contextId = [remoteContext contextId];

      // Send the contextId to the HostApp.
      ipc::Ipc::instance().write_data(&contextId);
    }

The code for sharing the CALayer is straightforward:

- Initialize the CAContext;
- Expose the layer for export via the CAContext setLayer: method;
- Pass the identifier of the CAContext to the Host App via the IPC library. There it will be used to create a CALayerHost instance.
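The last step relies on the IPC library delivering the identifier intact. Its implementation is not part of this article; the sketch below shows one way calls such as write_data and read_data could work over a POSIX file descriptor, looping until all bytes are transferred. write_all and read_all are hypothetical helper names.

```cpp
#include <cerrno>
#include <cstddef>
#include <cstdint>
#include <sys/types.h>
#include <unistd.h>

// Writes exactly `size` bytes to `fd`, retrying on partial writes and EINTR.
bool write_all(int fd, const void* data, std::size_t size) {
  const char* p = static_cast<const char*>(data);
  while (size > 0) {
    ssize_t n = write(fd, p, size);
    if (n < 0) {
      if (errno == EINTR) continue;
      return false;
    }
    p += n;
    size -= static_cast<std::size_t>(n);
  }
  return true;
}

// Reads exactly `size` bytes from `fd`. Returns false on error or if the
// peer closes the channel before all bytes have arrived.
bool read_all(int fd, void* data, std::size_t size) {
  char* p = static_cast<char*>(data);
  while (size > 0) {
    ssize_t n = read(fd, p, size);
    if (n == 0) return false;  // end of stream
    if (n < 0) {
      if (errno == EINTR) continue;
      return false;
    }
    p += n;
    size -= static_cast<std::size_t>(n);
  }
  return true;
}
```

The sender would pass the address and size of its CAContextID to write_all, and the receiver would pass a CAContextID variable of the same size to read_all.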

Host App

The main goal of the Host App is to display the content rendered in the Renderer App. Each layer is hosted by a separate NSWindow. First, we need to initialize a CALayerHost with the CAContextID received from the Renderer App process:

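    CALayerHost* HostApp::getLayerHost() {
      // Read the CAContextID from the Renderer App only once; later calls reuse it.
      if (context_id_ == 0) {
        ipc::Ipc::instance().read_data(&context_id_);
      }

      // Create a layer that displays the content of the remote CAContext.
      CALayerHost* layer_host = [[CALayerHost alloc] init];
      [layer_host setContextId:context_id_];
      return layer_host;
    }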

For convenience, we save the received ID in the context_id_ field. Then, the CALayerHost must be embedded into the NSView of a newly created window:

    [window setTitle:@"CARemoteLayer Example"];
    [window makeKeyAndOrderFront:nil];

    // Make the content view layer-backed so that sublayers can be added to it.
    NSView* view = [window contentView];
    [view setWantsLayer:YES];

    CALayerHost* layer_host = getLayerHost();
    [[view layer] addSublayer:layer_host];
    [layer_host setPosition:CGPointMake(240, 240)];
    printf("Host: Added the layer to the view hierarchy.\n");

Important: the wantsLayer property of the view must be set to YES, so that the view uses a CALayer to manage its rendered content.

Below you can see the result for two windows. Note that each window displays the same CALayer from the Renderer App.

Running the application with two windows
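Both windows show the same content because the Host App reads the CAContextID from the IPC channel only once and reuses it for every CALayerHost it creates. A minimal sketch of that read-once pattern in plain C++ follows. ContextIdCache and its reader callback are hypothetical, and unlike getLayerHost() the sketch tracks an explicit flag instead of treating 0 as "not received yet".

```cpp
#include <cstdint>
#include <functional>
#include <utility>

using CAContextID = std::uint32_t;

// Hypothetical helper: fetches the ID on first use and caches it afterwards.
class ContextIdCache {
 public:
  explicit ContextIdCache(std::function<CAContextID()> reader)
      : reader_(std::move(reader)) {}

  // Returns the cached ID, calling the reader only on the first call.
  CAContextID get() {
    if (!has_id_) {
      id_ = reader_();
      has_id_ = true;
    }
    return id_;
  }

 private:
  std::function<CAContextID()> reader_;  // e.g. a blocking read from the IPC channel
  CAContextID id_ = 0;
  bool has_id_ = false;
};
```

Every window that asks the cache for the ID gets the same value, so every CALayerHost ends up pointing at the same remote CAContext.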

You can find the complete source code of the example app with the build instruction here.

Conclusion

Putting GPU rendering into a separate process is a popular approach used in projects as big as Chromium. It improves the overall safety of the product and the maintainability of the code. In this article, we tried out the approach based on CALayer sharing. The main idea is to have one process that draws on a CALayer and shares the final layer with one or more other processes, which display it in their target views.
