In this article, we demonstrate how to implement screen sharing between two Compose Desktop applications using the capabilities of JxBrowser.

JxBrowser is a cross-platform JVM library that lets you embed a Chromium-based web browser control into Compose, Swing, JavaFX, and SWT apps and use hundreds of Chromium features. To implement screen sharing in Kotlin, we take advantage of Chromium’s WebRTC support and JxBrowser’s programmatic access to it.

Overview

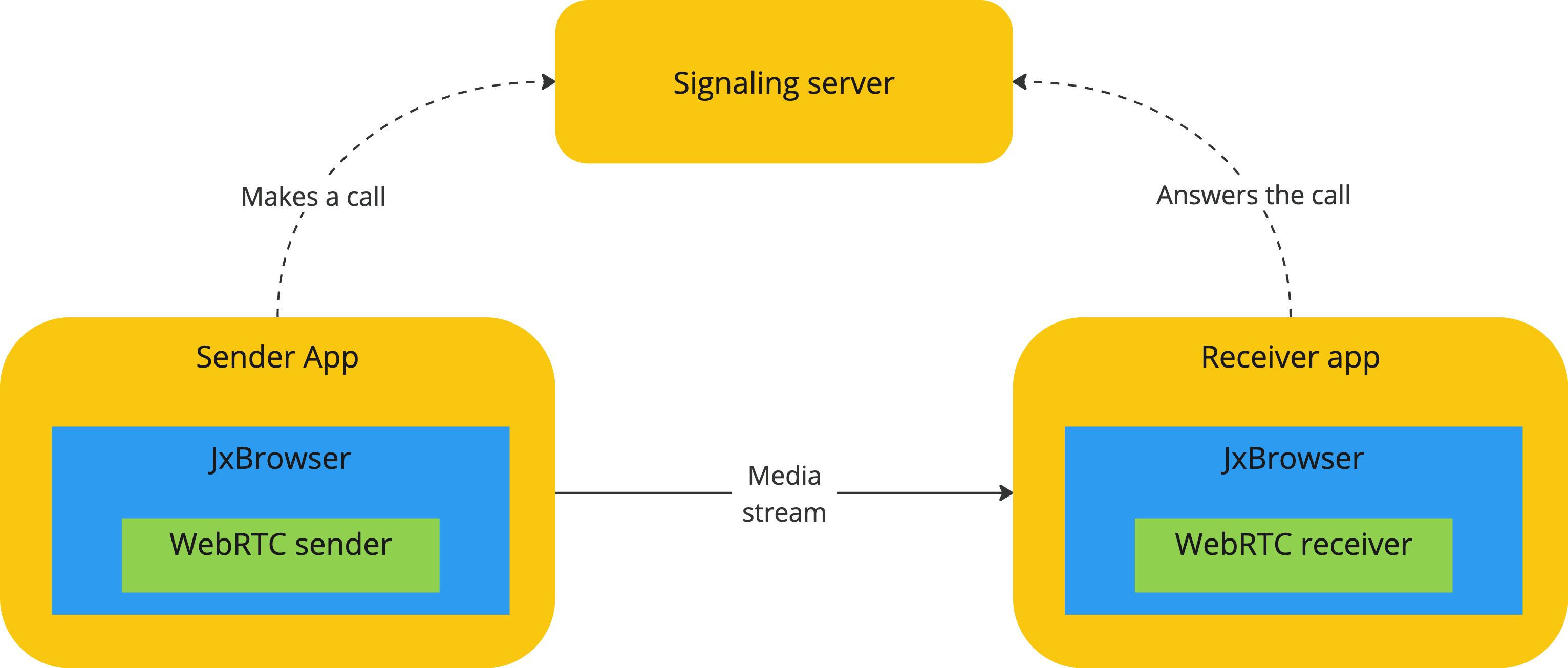

WebRTC is an open standard that enables real-time communication through a regular JavaScript API. The technology is available in all modern browsers, as well as in native clients for all major platforms. We will use it to send the video stream of the captured screen from one app to another.

The project consists of four modules:

- server – a signaling server for establishing direct media connections between peers.
- sender – a Compose app that shares the primary screen.
- receiver – a Compose app that shows the shared screen.
- common – holds the code shared between the Kotlin modules.

The signaling server facilitates the initial exchange of connection information between peers. This includes information about networking, session descriptors, and media capabilities.
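To make the role of signaling more concrete, here is a minimal in-memory sketch of such a relay. It is only an illustration: the `SignalingHub` class and the message shapes are hypothetical and do not reflect the actual PeerJS wire protocol.

```javascript
// Illustrative in-memory "signaling hub": it only relays small control
// messages between registered peers and never touches media data.
// The message shapes below are hypothetical, not the PeerJS wire protocol.
class SignalingHub {
  constructor() {
    this.peers = new Map(); // peer ID -> message handler
  }

  // Registers a peer under the given ID.
  register(id, onMessage) {
    if (this.peers.has(id)) {
      throw new Error(`Peer ID is already taken: ${id}`);
    }
    this.peers.set(id, onMessage);
  }

  // Relays a message to the target peer; returns false if it is unknown.
  relay(from, to, message) {
    const deliver = this.peers.get(to);
    if (!deliver) return false;
    deliver({ from, ...message });
    return true;
  }
}

const hub = new SignalingHub();
const receiverInbox = [];
const senderInbox = [];
hub.register('receiver', (message) => receiverInbox.push(message));
hub.register('sender', (message) => senderInbox.push(message));

// The caller offers a session description; the callee answers with its own.
hub.relay('sender', 'receiver', { type: 'offer', sdp: '<sender session description>' });
hub.relay('receiver', 'sender', { type: 'answer', sdp: '<receiver session description>' });
// The peers also pass along the network candidates they have discovered.
hub.relay('sender', 'receiver', { type: 'candidate', candidate: '<network address info>' });

console.log(receiverInbox.map((m) => m.type)); // [ 'offer', 'candidate' ]
console.log(senderInbox.map((m) => m.type));   // [ 'answer' ]
```

Once both peers have exchanged this information, the media itself flows directly between them and the relay is no longer involved.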

Compose clients are two desktop applications: one that shares the screen with a single click, and another that receives and displays the video stream.

Server

One of the primary challenges with WebRTC is managing signaling, which serves as a rendezvous point for peers, without transmitting actual data. We use the PeerJS library, which abstracts the signaling logic and lets us concentrate on the app’s functionality. The library provides both server and client implementations.

All we need to do is create an instance of PeerServer and run the created Node.js app.

To start, we add the required NPM dependency:

npm install peer

Then, create an instance of PeerServer:

const { PeerServer } = require("peer");

PeerServer({
    port: 3000
});

And run the created application:

node server.js
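The clients later need to tell PeerJS where this server lives. The PeerJS `Peer` constructor accepts an options object with fields such as `host`, `port`, `path`, and `secure`. As a sketch, a hypothetical `toPeerOptions` helper (not part of PeerJS or of the article's code) could derive that object from a URL:

```javascript
// Builds an options object for the PeerJS `Peer` constructor from a URL.
// `toPeerOptions` is a hypothetical helper; `host`, `port`, `path`, and
// `secure` are option names accepted by the PeerJS client.
function toPeerOptions(serverUrl) {
  const url = new URL(serverUrl);
  const secure = url.protocol === 'https:';
  return {
    host: url.hostname,
    // `URL` leaves the port empty when it is the protocol's default.
    port: url.port ? Number(url.port) : (secure ? 443 : 80),
    path: url.pathname,
    secure,
  };
}

console.log(toPeerOptions('http://localhost:3000/'));
// { host: 'localhost', port: 3000, path: '/', secure: false }
```

For the server started above, the resulting object points to `localhost:3000` with the root path.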

Compose clients

For Compose apps, we need to initialize an empty Gradle project with JxBrowser and Compose plugins:

plugins {
    id("org.jetbrains.kotlin.jvm") version "2.0.0"
    id("com.teamdev.jxbrowser") version "2.0.0"
    id("org.jetbrains.compose") version "1.6.11"
}

jxbrowser {
    version = "8.17.1"
    includePreviewBuilds()
}

dependencies {
    implementation(jxbrowser.currentPlatform)
    implementation(jxbrowser.compose)
    implementation(compose.desktop.currentOs)
}

Each Compose client consists of three layers:

- UI layer – Compose’s singleWindowApplication, which displays the interface.
- Browser integration layer – JxBrowser library, which embeds the browser into the Compose application.
- WebRTC component – PeerJS library, which establishes WebRTC connections and handles a media stream for screen sharing.

Receiver app

Let’s start with the receiver app that shows the shared screen.

We need to implement a receiving WebRTC component. It should connect to the signaling server and subscribe to incoming calls. Its API will consist of a single connect() function. The function should be exposed to the global scope to be accessible from the Kotlin side.

window.connect = (signalingServer) => {
    const peer = new Peer(RECEIVER_PEER_ID, signalingServer);
    peer.on('call', (call) => {
        call.answer();
        call.on('stream', (stream) => {
            showVideo(stream);
        });
        call.on('close', () => {
            hideVideo();
        });
    });
}

We answer each call and show the received stream in a <video> element. When the call closes, the element is hidden again. A link to the full source code on GitHub is provided at the end of the article.
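The `showVideo` and `hideVideo` helpers are not listed in the article. The sketch below is only a guess at what they could look like, assuming the page holds a single `<video autoplay muted hidden>` element. The `createVideoControls` factory is a hypothetical name that keeps the logic testable outside a browser.

```javascript
// A guess at what `showVideo` and `hideVideo` could look like; the article's
// real helpers live in its repository. `createVideoControls` is hypothetical.
function createVideoControls(video) {
  return {
    // Attaches the remote stream to the element and makes it visible.
    showVideo(stream) {
      video.srcObject = stream;
      video.hidden = false;
    },
    // Detaches the stream so the last frame does not linger, then hides.
    hideVideo() {
      video.srcObject = null;
      video.hidden = true;
    },
  };
}

// In the page, the element would come from document.querySelector('video').
// Outside a browser, a plain object is enough to try the logic:
const fakeVideo = { srcObject: null, hidden: true };
const { showVideo, hideVideo } = createVideoControls(fakeVideo);
showVideo('fake-stream');
console.log(fakeVideo.hidden); // false
hideVideo();
console.log(fakeVideo.hidden); // true
```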

Then, we will use JxBrowser to load this component and make the exposed JS functions invokable from Kotlin:

class WebrtcReceiver(browser: Browser) {

    private val webrtcComponent = "/receiving-peer.html"
    private val frame = browser.mainFrame!!

    init {
        browser.loadWebPage(webrtcComponent)
    }

    // Relies on `SignalingServer.toString()` producing a valid JS expression.
    fun connect(server: SignalingServer) = executeJavaScript("connect($server)")

    // Executes the given JS code upon the loaded frame.
    private fun executeJavaScript(javaScript: String) = // ...

    // Loads the page from the app's resources.
    private fun Browser.loadWebPage(webPage: String) = // ...
}

WebrtcReceiver loads the implemented component from the app’s resources into the browser and exposes a single public connect(...) method, which directly invokes its JS counterpart.

Finally, let’s create a Compose app to put all the pieces together:

singleWindowApplication(title = "Screen Viewer") {
    val engine = remember { createEngine() }
    val browser = remember { engine.newBrowser() }
    val webrtc = remember { WebrtcReceiver(browser) }
    BrowserView(browser)
    LaunchedEffect(Unit) {
        webrtc.connect(SIGNALING_SERVER)
    }
}

First, we create Engine, Browser, and WebrtcReceiver instances. Then, we add the BrowserView composable to display the HTML5 video player. In the launched effect, we connect to the signaling server, assuming it is a quick, non-failing operation.
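If connecting turns out to be slow or unreliable, the WebRTC component could wait for the `open` and `error` events that a PeerJS peer emits and retry on failure. The following is a sketch under that assumption. `openPeer`, `openPeerWithRetry`, and the `createPeer` factory are hypothetical names, and a Node.js `EventEmitter` stands in for a real peer so that the logic can run outside a browser.

```javascript
const { EventEmitter } = require('node:events');

// Settles when the peer reports `open` or `error`, or when time runs out.
// `createPeer` is a hypothetical factory; in the browser component it could
// be `() => new Peer(RECEIVER_PEER_ID, signalingServer)`.
function openPeer(createPeer, timeoutMs = 5000) {
  return new Promise((resolve, reject) => {
    const peer = createPeer();
    const timer = setTimeout(
      () => reject(new Error('Signaling server did not respond in time')),
      timeoutMs
    );
    peer.on('open', () => { clearTimeout(timer); resolve(peer); });
    peer.on('error', (error) => { clearTimeout(timer); reject(error); });
  });
}

// Tries to open a peer up to `attempts` times, pausing between the tries.
async function openPeerWithRetry(createPeer, attempts = 3, delayMs = 1000) {
  for (let attempt = 1; ; attempt++) {
    try {
      return await openPeer(createPeer);
    } catch (error) {
      if (attempt >= attempts) throw error;
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
}

// A stand-in peer: the first one fails, every later one opens.
let created = 0;
function fakePeer() {
  const peer = new EventEmitter();
  const number = ++created;
  setImmediate(() => {
    if (number === 1) peer.emit('error', new Error('server unavailable'));
    else peer.emit('open', 'receiver');
  });
  return peer;
}

openPeerWithRetry(fakePeer, 3, 10)
  .then(() => console.log(`connected after ${created} attempts`));
// connected after 2 attempts
```

The Kotlin side would still call `connect(...)` once. The retry policy stays inside the web component.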

Sender app

In the sender application, we share the primary screen by making a call to the receiver.

We need to implement the sending WebRTC component. It should be able to connect to the signaling server, start and stop a screen sharing session. Thus, its API will consist of three functions. These functions should be exposed to the global scope, so that they are accessible from the Kotlin side.

let peer;
let mediaConnection;
let mediaStream;

window.connect = (signalingServer) => {
    peer = new Peer(SENDER_PEER_ID, signalingServer);
}

window.startScreenSharing = () => {
    navigator.mediaDevices.getDisplayMedia({
        video: {cursor: 'always'}
    }).then(stream => {
        mediaConnection = peer.call(RECEIVER_PEER_ID, stream);
        mediaStream = stream;
    });
}

window.stopScreenSharing = () => {
    mediaConnection.close();
    mediaStream.getTracks().forEach(track => track.stop());
}

After the connection to the signaling server is established, the component can start and stop a screen sharing session. Starting a new session implies choosing a media stream with which to call the receiver. Both the stream and P2P media connection should be closed when the sharing is stopped. The connection to the signaling server is kept alive as long as the component is loaded.
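The start and stop lifecycle described above can also be written defensively, so that stopping before anything has started does not throw. This is a sketch, not the article's implementation. `createSharing`, `captureScreen`, and `callReceiver` are hypothetical names, and the browser dependencies are injected so the state handling can run outside a browser.

```javascript
// A defensive variant of start/stop with its browser dependencies injected.
// In the sending component it might be wired as:
//   createSharing({
//     captureScreen: () => navigator.mediaDevices.getDisplayMedia({ video: true }),
//     callReceiver: (stream) => peer.call(RECEIVER_PEER_ID, stream),
//   });
function createSharing({ captureScreen, callReceiver }) {
  let mediaStream = null;
  let mediaConnection = null;

  async function start() {
    if (mediaStream) return; // A session is already active.
    mediaStream = await captureScreen();
    mediaConnection = callReceiver(mediaStream);
  }

  function stop() {
    // Safe to call even if sharing has never started.
    mediaConnection?.close();
    mediaStream?.getTracks().forEach((track) => track.stop());
    mediaConnection = null;
    mediaStream = null;
  }

  return { start, stop, isActive: () => mediaStream !== null };
}

// Fakes that record what gets released.
const log = [];
const sharing = createSharing({
  captureScreen: async () => ({
    getTracks: () => [{ stop: () => log.push('track stopped') }],
  }),
  callReceiver: () => ({ close: () => log.push('connection closed') }),
});

sharing.stop(); // No-op: nothing has been started yet.
sharing.start().then(() => {
  sharing.stop();
  console.log(log); // [ 'connection closed', 'track stopped' ]
});
```

The guard in `start()` only covers a session that is already active. Two overlapping `start()` calls would still need extra handling.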

Similarly to the receiving app, let’s create a Kotlin wrapper for this component. We will extend WebrtcReceiver with new functionality to create WebrtcSender.

Firstly, we need to tell JxBrowser which video source to use. By default, when a web page wants to capture video from the screen, Chromium displays a dialog where we can choose a source. With JxBrowser API, we can specify the capture source directly in the code:

init {
    // Select a source when the browser is about to start a capturing session.
    browser.register(StartCaptureSessionCallback { params: Params, tell: Action ->
        val primaryScreen = params.sources().screens()[0]
        tell.selectSource(primaryScreen, AudioCaptureMode.CAPTURE)
    })
}

Secondly, we would like to let our UI know whether there’s an active sharing session now. It is needed to decide whether to show the Start or Stop button. Let’s create an observable property and bind it to CaptureSessionStarted and CaptureSessionStopped JxBrowser events:

var isSharing by mutableStateOf(false)
    private set

init {
    // ...

    // Update `isSharing` state variable as a session starts and stops.
    browser.subscribe<CaptureSessionStarted> { event: CaptureSessionStarted ->
        isSharing = true
        event.capture().subscribe<CaptureSessionStopped> {
            isSharing = false
        }
    }
}

The last thing to do is to add two more public methods that call their JavaScript counterparts:

fun startScreenSharing() = executeJavaScript("startScreenSharing()")
fun stopScreenSharing() = executeJavaScript("stopScreenSharing()")

That’s it!

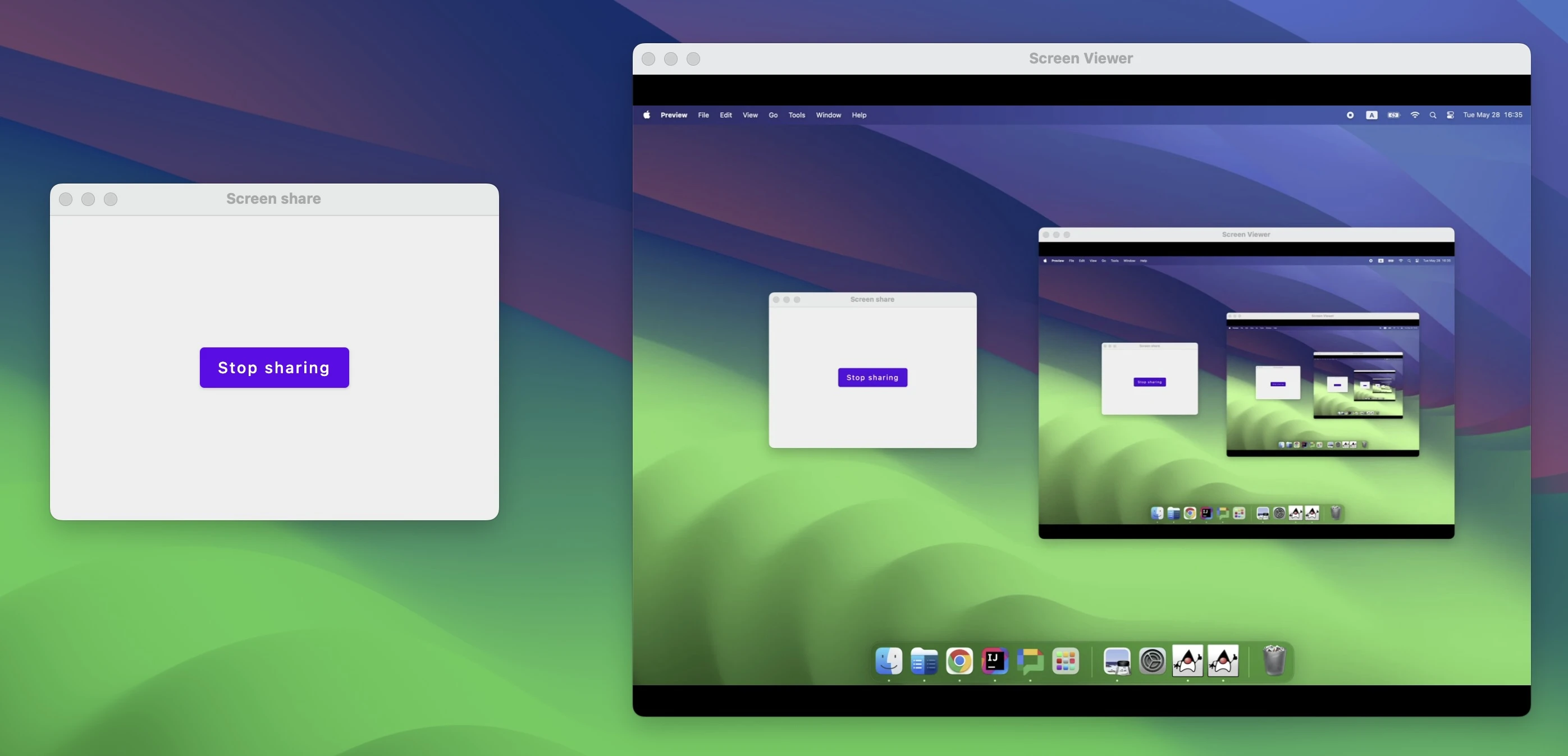

When running locally, the application looks like this:

Source code

Code samples are provided under the MIT license and available on GitHub. You can start the server and both Compose applications by executing the commands below. For convenience, you may do it in individual terminal sessions.

./gradlew :compose:screen-share:server:run
./gradlew :compose:screen-share:sender:run
./gradlew :compose:screen-share:receiver:run

Running on different PCs

As a bonus, you can easily make this example work on different PCs without exposing a locally-running signaling server. PeerJS offers a free cloud-hosted version of PeerServer. The library automatically connects to the public cloud server if not given a particular server to use. Please note, it is operational at the moment of writing, but its uptime is not guaranteed.

To try it out, stop passing the server explicitly to the Peer constructor in both the sending and receiving WebRTC components:

// Use the local server.
const peer = new Peer(RECEIVER_PEER_ID, signalingServer);

// Use the cloud-hosted instance.
const peer = new Peer(RECEIVER_PEER_ID);

Also, be aware that you will be sharing this public server with other people, and peer IDs may collide because they are set manually.
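One way to make collisions unlikely is to append a random suffix to each manually chosen ID. This is a minimal sketch, assuming the sender learns the receiver's ID through some other channel, for example by typing it in. `makePeerId` is a hypothetical helper that uses Node.js `crypto` here, and in the browser component `crypto.randomUUID()` offers the same functionality.

```javascript
const { randomUUID } = require('node:crypto');

// Appends a random suffix so the ID is unlikely to clash on a shared server.
// `makePeerId` is a hypothetical helper, not a part of PeerJS.
function makePeerId(prefix) {
  return `${prefix}-${randomUUID()}`;
}

const receiverId = makePeerId('receiver');
console.log(receiverId); // receiver-<random UUID>
```

The trade-off is that the sender can no longer rely on a hard-coded receiver ID.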

Conclusion

In this article, we have demonstrated how to share the screen in one Compose application and display the video stream in another one using JxBrowser and WebRTC. By leveraging JxBrowser’s integration of Chromium, and the PeerJS library for WebRTC, we can build a functional screen sharing application in no time.
